Talk:Search Odds/Archive3

Archive Page: This page is an archive page of Talk:Search Odds. Please do not add comments to it. If you wish to discuss the Search Odds page, do so at Talk:Search Odds.

Initial Ammo Level of Firearms

--Barcalow 18:06, 1 Nov 2005 (GMT)

Ratio of Bullets/Shells Found

After I excised the "four times as likely" comment on the "shots found" part of the Comments/Analysis section, it was put back. Why? We have plenty of data, and that data indicates that Clips are at least as common as Shells - and at 6 shots per Clip and 1 per Shell... --LouisB3 22:18, 13 Nov 2005 (GMT)

- I was also counting the shots that you get when you find loaded pistols and shotguns. Assuming a random distribution of shots in a gun when found, that's 1 extra shell for every shotty and 3 extra bullets for every pistol. So, searching 100 times in a police department, using today's Search%s, you find:

- 1.77 pistols --> 5.31 shots

- 7.17 clips --> 43.02 shots, 48.33 pistol shots total

- 1.54 shotguns --> 1.54 shots

- 7.07 shells --> 7.07 shots, 8.61 shotgun shots total

- and 48.33/8.61 is... well, hey, about 5.6. Either I made a mistake, or our numbers were different when I did this last. --Biscuit 00:14, 14 Nov 2005 (GMT)
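The arithmetic in this reply is easy to re-run whenever the Search%s change. A minimal sketch, assuming the per-100-search find counts quoted above and the post's averages of 3 bullets per found pistol and 1 shell per found shotgun; the variable names are illustrative only.

```python
# Expected shots per 100 police-department searches, using the figures quoted above.
SHOTS_PER_CLIP = 6
SHOTS_PER_SHELL = 1
AVG_BULLETS_IN_FOUND_PISTOL = 3   # assumption taken from the post
AVG_SHELLS_IN_FOUND_SHOTGUN = 1   # assumption taken from the post

# Finds per 100 searches, as quoted in the post.
pistols, clips, shotguns, shells = 1.77, 7.17, 1.54, 7.07

pistol_shots = pistols * AVG_BULLETS_IN_FOUND_PISTOL + clips * SHOTS_PER_CLIP
shotgun_shots = shotguns * AVG_SHELLS_IN_FOUND_SHOTGUN + shells * SHOTS_PER_SHELL
ratio = pistol_shots / shotgun_shots

print(f"pistol shots:  {pistol_shots:.2f}")
print(f"shotgun shots: {shotgun_shots:.2f}")
print(f"ratio:         {ratio:.1f}")
```

Swapping in newer find rates only requires changing the four numbers on the `pistols, clips, shotguns, shells` line.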

Representation of Results

Has anyone else thought that it might be time to make separate pages for the mall stores? Most of them have more data than other building types, and this way, if one of them changes individually (say, the hardware store), we can play around with just that one page. --Biscuit 01:42, 19 Nov 2005 (GMT)

- Yeah, they are getting a bit big... especially the "Good stuff" stores -- Juntzing 13:09, 24 Nov 2005 (GMT)

- I actually noted this on that Mall Data talk page, but yes, I agree. I think it should be Okay to split them just by store (as opposed to giving every table it's own data page). I'll start doing that now... -- Odd Starter 00:40, 26 Nov 2005 (GMT)

- Aaaand all done. The pages should be hunky dory now, though people should feel free to make minor adjustments as needed. -- Odd Starter 01:01, 26 Nov 2005 (GMT)

- Beautiful! Nicely done, again! --Biscuit 04:29, 28 Nov 2005 (GMT)

Confidence Intervals

I have been researching the methods for generating 'confidence intervals' for the Search Odds data. I'm not sure if anyone is really interested in this, but since I did look into it I thought I'd report my results here. There are several ways to approach this problem, and I would like to present the method which I consider to be the best.

Stuckinkiel 16:17, 28 Nov 2005 (GMT)

Background

We assume that in Urban Dead, the probability P of finding a particular object in a particular building type (for example, a Newspaper in a Hospital) is fixed (set somewhere in the software). Suppose we have searched a particular building n times, and we found a particular object y times. Using this information, we would like to guess the unknown probability P. Our best guess is y/n, and this is what is reported on the Search Odds pages. I was surprised to find out that this has actually been mathematically proven to be the maximum likelihood solution.

But, how sure are we that this solution is correct? This question is more complicated than it may at first seem. After all, what do we mean by 'correct'? For example: if we computed y/n to be 0.51, can we safely assume that the underlying probability P is 0.50? Obviously, the confidence in our measurement of the correct probability depends on the number of searches we have performed. It also depends, however, on the magnitude of the unknown probability P.

Solution

Fortunately this problem is not new, and there is a well-described mathematical distribution that can help us compute a solution. The Beta Distribution is a probability density function used for binomial data that is typically described by two parameters, alpha and beta. Quantiles generated from the appropriate Beta Distribution can be used to compute the range of Search Odds probabilities P that most likely fit the data that we observed. For example, if we want to be 90% sure that the unknown probability P lies within a particular range, the range can be computed as follows:

- Compute alpha: alpha = y + 1

- Compute beta: beta = n - y + 1

- Compute the value at quantile 0.05

- Compute the value at quantile 0.95

(note, the region between quantiles 0.05 and 0.95 accounts for 90% of the distribution)

Computing the values of the quantiles is not trivial and is probably best left to a computer program.

- In the statistical package "R", the function qbeta() returns the quantile.

- In the Math::CDF module of the Perl scripting language, a function of the same name, qbeta(), can be used.

- There are also several online applications which do this, one of which is http://www.causascientia.org/math_stat/ProportionCI.html

- It seems (from images posted) that other participants on the Search Odds pages used Microsoft Excel to run statistical analysis. I looked (admittedly briefly) for a qbeta() function for Excel, but could not find one which was free.

Examples

y=50, n=100: alpha=51, beta=51; qbeta(0.05, 51, 51) = 0.4189 and qbeta(0.95, 51, 51) = 0.5811. So, if we find 50 after searching 100 times, most likely P=0.5 and we can be 90% sure that 0.4189 < P < 0.5811.

y=500, n=1000: alpha=501, beta=501; qbeta(0.05, 501, 501) = 0.4740 and qbeta(0.95, 501, 501) = 0.5260. So, if we find 500 after searching 1000 times, most likely P=0.5 and we can be 90% sure that 0.4740 < P < 0.5260.

y=10, n=1000: alpha=11, beta=991; qbeta(0.05, 11, 991) = 0.0062 and qbeta(0.95, 11, 991) = 0.0169. So, if we find 10 after searching 1000 times, most likely P=0.01 and we can be 90% sure that 0.0062 < P < 0.0169.
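For anyone without R or Perl to hand, the same intervals can be approximated with nothing but the Python standard library. This is a rough sketch, not how qbeta() is implemented in R: it integrates the Beta density numerically (Simpson's rule) and finds the quantile by bisection. The function names `beta_cdf`, `qbeta` and `search_odds_interval` are made up for this example.

```python
import math

def beta_cdf(x, a, b, steps=2000):
    """P(P <= x) for Beta(a, b) with a, b >= 1, by composite Simpson integration."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)

    def pdf(t):
        # Endpoints are handled separately to avoid log(0).
        if t <= 0.0:
            return float(b) if a == 1 else 0.0
        if t >= 1.0:
            return float(a) if b == 1 else 0.0
        return math.exp(log_norm + (a - 1) * math.log(t) + (b - 1) * math.log1p(-t))

    h = x / steps  # steps must be even for Simpson's rule
    total = pdf(0.0) + pdf(x)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    return total * h / 3

def qbeta(p, a, b):
    """Quantile of Beta(a, b): bisect on x until beta_cdf(x) is approximately p."""
    lo, hi = 0.0, 1.0
    for _ in range(50):
        mid = (lo + hi) / 2
        if beta_cdf(mid, a, b) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def search_odds_interval(y, n, level=0.90):
    """Interval for P after y finds in n searches (alpha = y + 1, beta = n - y + 1)."""
    tail = (1 - level) / 2
    alpha, beta = y + 1, n - y + 1
    return qbeta(tail, alpha, beta), qbeta(1 - tail, alpha, beta)

for y, n in [(50, 100), (500, 1000), (10, 1000)]:
    low, high = search_odds_interval(y, n)
    print(f"y={y}, n={n}: {low:.4f} < P < {high:.4f}")
```

Numerical integration is slow and only approximate compared with a proper statistics library, but it keeps the example dependency-free and makes the alpha/beta recipe explicit.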

Note: This is NOT a 'confidence interval' in the traditional statistical sense. This is a computation based on Bayesian statistics and tells us which 'models' best fit the data which was collected. If you are curious as to why one should use the Bayesian method, consider reading chapter 37 (pages 457-67) in David MacKay's 'Information Theory, Inference, and Learning Algorithms', which is available for download at no cost. The rest of this book is too complicated for me; however, I did find chapter 37 to be worthwhile. It is also probably of general interest to note that the values generated by this method are not much different from those generated by a classical 'confidence interval' computation.

Statistical Analyses

Understanding these analyses

I would be remiss in my duties as a statistician if I did not point people to various resources that should help in understanding what I'm writing down.

The exact test I'm using is known as Pearson's Chi Square test, or, simply, a Chi Square. This is among the simplest inferential statistics tests available, and can very easily be done in an Excel environment with minimal issues.

For a brief course on chi squares, there is a quite useful online textbook available for free, known as SticiGui. SticiGui's chapter on chi square analysis is quite useful, and is reasonably easy to read. Further, if you get stuck, SticiGui has a very nice hypertext interface, so you can read up on any statistical concept you're not aware of.

For those that just get hopelessly lost in statistics, Audience Dialogue, an audience research site, has a nice page full of useful links for the non-statistician. Most of this is aimed more at getting a good grasp of descriptive statistics, as opposed to the inferential statistics that I'm doing below. Still, a good grasp of descriptive statistics can only help you to step up to the scary world of inferential statistics. From that page, Statistics for Writers is a very nice, non-geek guide to descriptive statistics, and the pitfalls therein.

As a note, when I say inferential statistics, what that really means is using statistical analysis to prove that a behaviour can be generalised beyond the data that we're looking at. Descriptive statistics is less bold in its intent - descriptive statistics merely tell us stuff about the data we're looking at, and we can't use them to make statements about the population we've taken the data from.

For those wishing to replicate my analyses, and for those who want to see me try and explain what the hell I'm doing, I've created a tutorial page, at /chi square tutorial for excel

Confirmation of Hypothesised Drug Store (Bargain Hunting) Distribution

While I've not done a true confidence interval, I decided to borrow a statistics book for a refresher on statistical methods. I was right - Pearson's Chi Square test is exactly what's needed for hypothesis testing on these statistics. For my first trick, I shall do the simplest of our distributions - the Drug Store, for bargain hunting. I'll not describe this too intently - The webpage provided should give a basic idea of the test, and I'll give my numbers using the variables described there.

In any hypothesis test, we first need a hypothesis. Thus, I am testing to see whether the data we have collected significantly deviates from the following fractions:

| Category | Fraction |

|---|---|

| First Aid Kit | 0.35 |

| Nothing | 0.65 |

We have sample sizes of 916 for our Bargain hunting data, and 293 for No Bargain Hunting data. With this, we can build our Frequency table:

| Frequency | First Aid Kit | Nothing | Total |

|---|---|---|---|

| f(o) | 311 | 605 | 916 |

| f(e) | 320.6 | 595.4 | 916 |

Our chi square formula is:

χ^2 = sum(((f(o)-f(e))^2)/f(e))

So, plugging in our values:

- χ^2 = (((311 - 320.6)^2)/320.6) + (((605 - 595.4)^2)/595.4)

- χ^2 = (((-9.6)^2)/320.6) + (((9.6)^2)/595.4)

- χ^2 = (92.16/320.6) + (92.16/595.4)

- χ^2 = 0.287 + 0.155

- χ^2 = 0.442

That's incredibly low, especially considering that a perfect match is 0. Even on the most charitable significance level, we're not even close to saying that our data doesn't fit. We would, thus, be justified in claiming that our expected fractions are quite close. We could even play around with the fractions, seeing if any other viable fraction gives us a number closer to 0:

- 25/75 = 39.15

- 30/70 = 6.812

- 35/65 = 0.442

- 40/60 = 13.96

- 45/55 = 45.174

As a note, on every alternative except 30/70 we blow every significance test (ie, the frequencies and data don't match), and on 30/70 only the most stingy test lets us use it. Although 35/65 is the closest to 0, we should note that the probability associated with its χ^2 value is only 0.5061 [1]. Still, it seems that 35/65 is our correct distribution. -- Odd Starter 11:14, 30 Nov 2005 (GMT)
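The scan over candidate fractions can be reproduced outside Excel. A minimal sketch, assuming the observed counts from the frequency table above (311 First Aid Kits, 605 Nothing); the helper names are hypothetical. With two categories there is 1 degree of freedom, for which the chi-square tail probability has the closed form erfc(sqrt(χ^2/2)).

```python
import math

observed_kits, observed_nothing = 311, 605
n = observed_kits + observed_nothing

def chi_square_two_categories(p_kit):
    """Pearson chi-square for a hypothesised First Aid Kit probability."""
    expected_kits = n * p_kit
    expected_nothing = n * (1 - p_kit)
    return ((observed_kits - expected_kits) ** 2 / expected_kits
            + (observed_nothing - expected_nothing) ** 2 / expected_nothing)

def p_value_df1(chi2):
    """P(X > chi2) for a chi-square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2))

for p_kit in (0.25, 0.30, 0.35, 0.40, 0.45):
    chi2 = chi_square_two_categories(p_kit)
    print(f"{p_kit:.2f}/{1 - p_kit:.2f}: chi2 = {chi2:7.3f}, p = {p_value_df1(chi2):.4f}")
```

Adding other candidates to the tuple (0.34, say) is a quick way to see how flat the statistic is near the observed fraction.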

- Sounds all very interesting to me even though I don't get it all ^_^ We'll let you crunch the numbers. Good job.

- ----------------

- Odd Starter, first I would like to commend you on your work with the Urban Dead statistics. These pages are a truly useful resource to all players, and should be duly appreciated as such. Thank you for taking the time to present your analysis methods on these pages. Everyone who has been working, collecting and analysing this data has been doing a great job. Kudos!

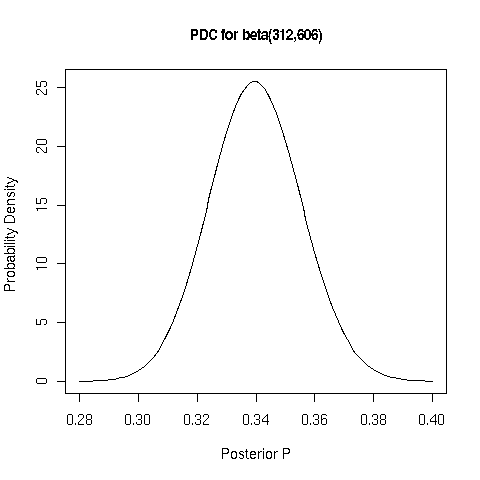

- I would like to make some observations on the Chi squared statistics performed in the example above, and make a case for adding confidence intervals to this analysis. I agree that the statistical analysis above is correct, and does reveal some of the underlying mechanisms governing bargain hunting searches in drug stores. I would like to show here that examining the beta distribution that I described above (in the Confidence Intervals section) may shed even more light on the underlying probability. Using the drug store search data, I computed the alpha and beta values of the beta distribution (312, 606 respectively) and was able to generate the following probability density curve:

- From this distribution, one can see that the maximum likelihood posterior probability is very close to 0.34. In fact, if we do a Chi squared test on 34/66 we get a value of 0.0009, which has an associated p-value of 0.9755. This is not surprising. Given the data, the split that will generate the lowest Chi squared value is 311:605, i.e. 311/916 (posterior P = 0.33952). So, if we ask the question "What is the probability of finding a first aid kit in a drug store with bargain hunting?", our best answer (given the data we have collected so far) is "0.33952 more or less". Given that the actual probability is governed by some line of code in some script on the Urban Dead server, we might surmise that the 'real' value is 0.35, but it could just as well be 0.34, or 0.33952, or 0.3333 for that matter. The next question we would probably want to ask is, "What do you mean '0.33952 more or less', how much 'more or less' are we talking about here?" To answer that question, we can generate a confidence interval. For example, we could say "the chance of finding a first aid kit is 0.34 +/- 0.02" with 80% confidence that this is correct. (I used qbeta(0.1,312,606) and qbeta(0.9,312,606) to compute this.) As more data is collected, this estimate will get better and better.

- Confidence intervals in this case are a big help because they tell us how reliable the reported probabilities are. After all 0.34 +/- 0.02 and 0.34 +/- 0.20 lead us to very different expectations. But just talking about this is academic unless it is actually applied. I am currently working on some scripts that automate the computation of confidence intervals from the search pages. I will be happy to share these with anyone who is interested. And anyway, I think we are all having fun playing with this data.

- Stuckinkiel 14:19, 2 Dec 2005 (GMT)

- I admit that I'm using a little extra knowledge that restricted the choice of possible ratios tested. I've made the assumption that most if not all probabilities will work on multiples of 5, rather than on other, more arbitrary possibilities. This is primarily due to the fact that I know a human invented this system, and would be likely to build a system that's "human-readable", of which 5-multiples are a standard feature. Thus why I chose 35/65 instead of the closer 34/66. -- Odd Starter 06:10, 4 Dec 2005 (GMT)

- Also, admittedly, I'm a tad lost on confidence intervals. I know computing them is basically computing mean +/- 2 Standard deviations, and then determining what actually does fit between those data points, but the stats book I've been revising on doesn't seem to help me much, and using SPSS to generate the confidence intervals for all the data would mean I'd still have no idea... -- Odd Starter 06:24, 4 Dec 2005 (GMT)

- Actually, the thing that I calculated is not technically a confidence interval. I think the proper name is 'probability interval'. So, we can say that there is an 80% probability that the "true" chance of finding a FAK is between 0.32 and 0.36. This is not the same as a confidence interval, which says that IF the true chance of finding a first aid kit is (say) 0.34, AND if we sampled this data set many, many times, our value would be within the confidence interval (say) 80% of the time. Although these sound very similar, they are actually fundamentally different things and have a lot to do with the on-going split/controversy between the "Bayesian" and "frequentist" philosophies in statistics. The textbook you were looking at is most probably a frequentist textbook, and therefore doesn't mention Bayesian analytical methods. Also, because this statistic is basically a descriptive statistic (What is the chance of finding a FAK?), there is no real hypothesis to test and frequentist statistics does not have much to say. At this point it probably sounds like I'm some kind of Bayesian statistics fanatic. I should make it clear that I'm not. I believe that one should be aware of both types of analysis and apply them each appropriately. I am actually a relative beginner when it comes to Bayesian methods, and I am just having fun trying to apply them to the Urban Dead data sets.

- Stuckinkiel 13:33, 6 Dec 2005 (GMT)

Rejection of Separation of Church and Cathedral

For my next trick, we'll show that the distributions of Church data and Cathedral data are almost certainly the same distribution - that precisely the same odds exist in both areas.

This also uses a Chi-square analysis, although a different type. Learning how to do this sort of test is fairly easy to find through Google, so I'll leave the major issues to the reader.

Our hypothesis, as a note, is that the Church and Cathedral values are different in some way. We are actually testing the inverse of this, but this doesn't really matter. We state our hypothesis in such a manner as to make a claim against the status quo.

With our hypothesis stated, we get started. First up, we summarise our observed values in both data sets, as follows:

| f(o) | First Aid Kit | Crucifix | Wine | Nothing | Total |

|---|---|---|---|---|---|

| Church | 9 | 12 | 6 | 133 | 160 |

| Cathedral | 12 | 14 | 7 | 161 | 194 |

| Total | 21 | 26 | 13 | 294 | 354 |

Again, we'll need to identify our expected values, although this time we calculate them from the column and row totals, as we're directly comparing the two sets to each other instead of to a predetermined value. We compute our values (rounded to three decimal places) as follows:

- f(e) = ((column total)*(row total))/(grand total)

Following this, we get:

| f(e) | First Aid Kit | Crucifix | Wine | Nothing | Total |

|---|---|---|---|---|---|

| Church | 9.492 | 11.751 | 5.876 | 132.881 | 160 |

| Cathedral | 11.508 | 14.249 | 7.124 | 161.119 | 194 |

| Total | 21 | 26 | 13 | 294 | 354 |

Using the same test equation we used previously (χ^2 = sum(((f(o)-f(e))^2)/f(e))), we compute each cell to collect the following:

| diff | First Aid Kit | Crucifix | Wine | Nothing | Total |

|---|---|---|---|---|---|

| Church | 0.025453995 | 0.005258583 | 0.002629292 | 0.000105932 | 0.033447802 |

| Cathedral | 0.020992986 | 0.004336976 | 0.002168488 | 8.73668E-05 | 0.027585816 |

| Total | 0.046446981 | 0.009595559 | 0.00479778 | 0.000193299 | 0.061033618 |

And so, looking at the bottom-right corner of our computation table (our total of totals), we get our χ^2 value:

- χ^2 = 0.061033618

Which, when you look at it, is pretty damn small. Small enough that not even the stingiest of significance values will even consider letting us use this (the smallest critical value that we could use at our degrees of freedom, as a note, is 6.25). Looking it up, the probability of such a result indicating that the two data sets are the same is 0.9960. Thus, it is proven that Cathedral and Church Data are in fact part of the same data set. -- Odd Starter 13:08, 30 Nov 2005 (GMT)
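The expected-value and difference tables above follow mechanically from the observed table, so they are easy to regenerate as more searches come in. A minimal sketch using the Church and Cathedral counts above; the dictionary layout and variable names are just one possible arrangement, and the critical value 6.25 is the one quoted in the post.

```python
observed = {
    "Church":    [9, 12, 6, 133],    # First Aid Kit, Crucifix, Wine, Nothing
    "Cathedral": [12, 14, 7, 161],
}

row_totals = {name: sum(row) for name, row in observed.items()}
col_totals = [sum(col) for col in zip(*observed.values())]
grand_total = sum(row_totals.values())

chi2 = 0.0
for name, row in observed.items():
    for obs, col_total in zip(row, col_totals):
        # f(e) = ((column total) * (row total)) / (grand total)
        expected = col_total * row_totals[name] / grand_total
        chi2 += (obs - expected) ** 2 / expected

# Degrees of freedom for a table: (rows - 1) * (columns - 1).
degrees_of_freedom = (len(observed) - 1) * (len(col_totals) - 1)
CRITICAL_VALUE = 6.25   # threshold quoted in the post for these degrees of freedom

print(f"chi2 = {chi2:.4f} with {degrees_of_freedom} degrees of freedom")
print("significant" if chi2 > CRITICAL_VALUE else "not significant at this threshold")
```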

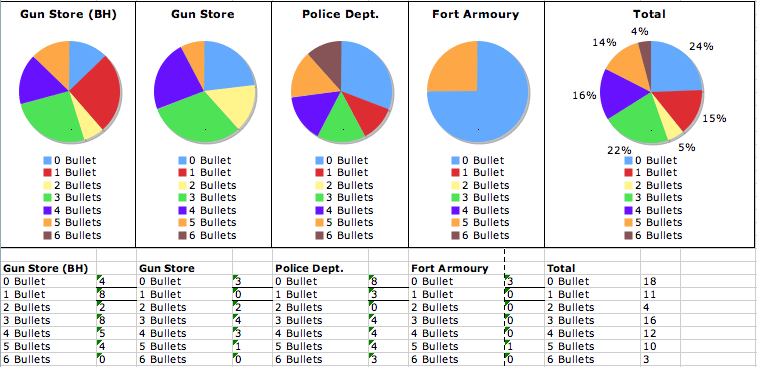

Do Ammo found levels differ between locations?

Using the process for our last set, we can try and find out whether the level of ammunition found in guns differs significantly between locations.

This being our hypothesis, we again set up our observed data tables. We have two this time, since we need to test our shotgun separately from our pistols, as follows:

[Observed-frequency tables for pistols and shotguns not preserved]

Then come up with our expected frequencies, using f(e) = ((column total)*(row total))/(grand total) for each table:

[Expected-frequency tables for pistols and shotguns not preserved]

Once again, compute our difference values:

[Difference tables for pistols and shotguns not preserved]

And looking in the bottom-right corner of each table, we get our two χ^2 values: 18.161 for pistols and 9.022 for shotguns. Since we're comparing large numbers of categories, the largeness of these numbers may not necessarily mean there are differences in the data. If we accept a 1/10 chance that we could be wrong, at our degrees of freedom for each group (18 for Pistols, and 6 for Shotguns), we'd have to find χ^2 values of 25.99 and 10.64 respectively for us to claim that there's any significant difference. In fact, we come up short on both. The actual probabilities that these results occur by chance within the same data set are 0.4451 for the pistol data and 0.1723 for the shotgun data. So, it's not a good fit at all, but still not bad enough for us to claim that these numbers prove different data sets for each location. -- Odd Starter 00:19, 1 Dec 2005 (GMT)

Necrotech Buildings - Before and After

Before books were being found in Necrotech buildings, we had a pretty clear indicator that each item had equal odds of being found. This was found after a good 2000 searches. But what about now, with only 300 searches? Can we get an answer now?

In fact we can. First, we'll confirm our suspicions regarding the pre-book Necrotech probabilities, then move to post-book. For the sake of clarity, I've chosen to exclude the results between the 18th of November and the first search that contained a book, since we can't actually be certain of when the book entered the Necrotech probability table.

Before the Book

So, our hypothesis is that, given a successful search, GPS Units, Syringes and DNA Extractors were equally likely to be picked from the list. This makes our hypothesised probability distribution as follows:

| Category | Search % | Found % |

|---|---|---|

| Syringe | 6.67% | 33.33% |

| DNA Extractor | 6.67% | 33.33% |

| GPS Unit | 6.67% | 33.33% |

| Fail | 80.00% | |

So, we load up our table:

| | Syringe | DNA | GPS | Fail | total |

|---|---|---|---|---|---|

| f(o) | 114 | 132 | 139 | 1581 | 1966 |

| f(e) | 131.0666667 | 131.0666667 | 131.0666667 | 1572.8 | 1966 |

| diff | 2.222312648 | 0.006646321 | 0.480196677 | 0.04275178 | 2.751907426 |

Thus, χ^2 = 2.751907426. After embarking on this, it turns out that's rather high: only about a 43% chance of matching our distribution (quite high, as a note, but still, not the best fit). As we can see, the main issue is the Syringes - they seem to be found at a considerably lower rate than the rest.

So, I decided to try a different distribution. Instead of all three being perfectly even, we make Syringes 5% lower than the other two. So:

| Category | Search % | Found % |

|---|---|---|

| Syringe | 6.00% | 30.00% |

| DNA Extractor | 7.00% | 35.00% |

| GPS Unit | 7.00% | 35.00% |

| Fail | 80.00% | |

The table looks as follows:

| | Syringe | DNA | GPS | Fail | total |

|---|---|---|---|---|---|

| f(o) | 114 | 132 | 139 | 1581 | 1966 |

| f(e) | 117.96 | 137.62 | 137.62 | 1572.8 | 1966 |

| diff | 0.13293998 | 0.229504432 | 0.013838105 | 0.04275178 | 0.419034297 |

In this case χ^2 = 0.419034297 has a much higher probability, about a 93.62% chance of being correct. It seems our original hypothesis was close, but not correct.
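Comparing the two pre-book hypotheses side by side needs the chi-square tail probability at 3 degrees of freedom (four categories minus one). A rough sketch, assuming integer degrees of freedom, for which the tail probability can be built up with a standard recurrence instead of a lookup table; `chi2_sf` and `goodness_of_fit` are names invented for this example.

```python
import math

def chi2_sf(x, df):
    """P(chi-square with integer df exceeds x), using the recurrence
    Q(df + 2) = Q(df) + (x/2)**(df/2) * exp(-x/2) / Gamma(df/2 + 1)."""
    half = x / 2
    if df % 2:
        q, k = math.erfc(math.sqrt(half)), 1   # base case: df = 1
    else:
        q, k = math.exp(-half), 2              # base case: df = 2
    while k < df:
        q += half ** (k / 2) * math.exp(-half) / math.gamma(k / 2 + 1)
        k += 2
    return q

def goodness_of_fit(observed, probabilities):
    """Return (chi-square statistic, tail probability) for hypothesised odds."""
    n = sum(observed)
    chi2 = sum((o - n * p) ** 2 / (n * p) for o, p in zip(observed, probabilities))
    return chi2, chi2_sf(chi2, len(observed) - 1)

observed = [114, 132, 139, 1581]   # Syringe, DNA Extractor, GPS Unit, Fail
hypotheses = {
    "equal thirds":   [0.2 / 3, 0.2 / 3, 0.2 / 3, 0.80],
    "syringes lower": [0.06, 0.07, 0.07, 0.80],
}
for label, probs in hypotheses.items():
    chi2, p = goodness_of_fit(observed, probs)
    print(f"{label}: chi2 = {chi2:.3f}, p = {p:.4f}")
```

The same two functions work for the post-book table by passing five observed counts and five probabilities.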

After the Book

Although our intuitions were incorrect about the pre-book distribution, could they be right about the post-book distribution? We have less data to work off of, but we have more than enough to perform a good analysis.

To start off with, our hypothesised distribution:

| Category | Search % | Found % |

|---|---|---|

| Syringe | 5.00% | 25.00% |

| DNA Extractor | 5.00% | 25.00% |

| GPS Unit | 5.00% | 25.00% |

| Book | 5.00% | 25.00% |

| Fail | 80.00% | |

So, our table looks as follows:

| | Syringe | DNA | GPS | Book | Fail | total |

|---|---|---|---|---|---|---|

| f(o) | 15 | 17 | 15 | 14 | 266 | 327 |

| f(e) | 16.35 | 16.35 | 16.35 | 16.35 | 261.6 | 327 |

| diff | 0.11146789 | 0.025840979 | 0.11146789 | 0.337767584 | 0.074006116 | 0.660550459 |

It looks exceptionally promising: our total χ^2 = 0.660550459. The probability of such a score, with the number of categories that we have, is 95.61%. There's not that much data, so there is likely a lot of error still. But in the absence of any other evidence, this seems to be a much better bet. Only more data will tell if this pattern continues. -- Odd Starter talk | Mod 04:08, 6 Dec 2005 (GMT)

- It might be fun to do a power analysis using sample size N, to find out just how big of a frequency difference we could expect to detect with this test. It would probably also be useful when looking at the Church/Cathedral and the Ammo examples as well. I would really like to do this, but my boss is piling more work on my desk as I write this. I'll have to get around to this when I can.

- Stuckinkiel 13:33, 6 Dec 2005 (GMT)

Find more with the lights on?

So, now that the generators have come into play, we've got an interesting question - does having the lights on have any effect on Search probabilities? It's certainly plausible, but is it correct?

We should have just enough data to test this, thanks to a spurt of reporting in the Hospital data. Typically, a Chi Square test wants enough data that the expected distribution gives a count of at least 5 in each category. We have that (just), so let's get stuck into this.

Our Hypothesis that we are testing is that there is some difference between search probabilities when lights are on, and when they are off. Simple as that.

We show our observed data:

| f(o) | First Aid Kit | Newspaper | Fail | Total |

|---|---|---|---|---|

| Lights on | 24 | 10 | 116 | 150 |

| Lights off | 13 | 5 | 59 | 77 |

| Total | 37 | 15 | 175 | 227 |

And from this, we calculate our expected data:

| f(e) | FAK | News | Fail | Total |

|---|---|---|---|---|

| Lights on | 24.449 | 9.912 | 115.639 | 150 |

| Lights off | 12.551 | 5.088 | 59.361 | 77 |

| Total | 37 | 15 | 175 | 227 |

And, again, we work our chi square magic to produce our difference scores:

| diff | FAK | News | Fail | Total |

|---|---|---|---|---|

| Lights on | 0.008258126 | 0.000783162 | 0.001128425 | 0.010169713 |

| Lights off | 0.016087258 | 0.00152564 | 0.00219823 | 0.019811128 |

| Total | 0.024345384 | 0.002308802 | 0.003326654 | 0.029980841 |

Our total, χ^2 = 0.029980841, is exceptionally low. Low enough, in fact, that our probability that these two groups come from the same data set is 98.51%. From this, we can pretty safely conclude that the lights being on probably has no effect on search probabilities at all. -- Odd Starter talk | Mod 08:43, 8 Dec 2005 (GMT)
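The "at least 5 in each category" rule of thumb mentioned at the start of this section can be checked alongside the test itself. A small sketch using the hospital counts above; the variable names are illustrative. A 2x3 table has (2 - 1) * (3 - 1) = 2 degrees of freedom, where the chi-square tail probability simplifies to exp(-χ^2/2).

```python
import math

observed = [
    [24, 10, 116],   # lights on:  First Aid Kit, Newspaper, Fail
    [13, 5, 59],     # lights off: First Aid Kit, Newspaper, Fail
]
row_totals = [sum(row) for row in observed]
col_totals = [sum(col) for col in zip(*observed)]
grand_total = sum(row_totals)

# Expected count for each cell from the row and column totals.
expected = [[r * c / grand_total for c in col_totals] for r in row_totals]
smallest_expected = min(min(row) for row in expected)

chi2 = sum((o - e) ** 2 / e
           for obs_row, exp_row in zip(observed, expected)
           for o, e in zip(obs_row, exp_row))

# With 2 degrees of freedom, P(X > chi2) = exp(-chi2 / 2).
p_value = math.exp(-chi2 / 2)

print(f"smallest expected count: {smallest_expected:.3f} (rule of thumb: at least 5)")
print(f"chi2 = {chi2:.4f}, p = {p_value:.4f}")
```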

Power analysis of the 'lights on' problem

I thought it would be enlightening to do a power analysis of the above problem to quantify our chances of detecting a difference between the number of first aid kits found in hospitals with and without running generators. There are plenty of statistics textbooks that describe how to do this, but I often use this handy reference when dealing with these kinds of problems at work. It's easy to read and provides lots of details on how to do power analysis on the Χ² and many commonly used statistical models. Plus, the authors provide a computer program so that you don't have to do all the statistics yourself, by hand. The paper is written from the perspective of clinical medical trials, but the Χ² statistics are the same for our Urban Dead searches.

Power and Sample Size Calculations: A review and computer program William D. DuPont and Walton D. Plummer Jr. Controlled Clinical Trials 11:116-128(1990)

In the above example, the probability of finding a first aid kit was almost identical whether a generator was running or not. But a total of only 227 searches (150 lights on, 77 lights off) were conducted. Is that enough? We might ask ourselves, "Let's assume that Kevan added 5% to our chance of finding a first aid kit with the lights on. What are our chances of detecting this difference in 227 searches?"

In the dichotomous test section of the program provided by DuPont and Plummer, we select 'Power' and plug in the following numbers:

| variable | value | description |

|---|---|---|

| α | 0.05 | the probability of a type I error (finding a difference when there is none) |

| n | 227 | the number of searches |

| p0 | 0.16 | the null hypothesis, probability of finding a first aid kit with the lights on (24/150) |

| p1 | 0.21 | the alternate hypothesis (p0 + 0.05) |

| m | 1.948 | the ratio between searches with lights on and off (150/77) |

The result is 1-β = 0.3655. That means that if the difference between search probabilities is 5%, we have only a 37% chance of detecting this difference, given the number of searches we have performed. In other words, there is a 63% chance that we would miss a 5% difference (if one exists). Note: power is defined as 1-β, where β is the probability of a type II error (the probability of finding no difference when there is one).

Another interesting question we might ask is, "How many searches do we have to do so that we can be sure the probabilities differ by more than 5%?" Here, we have to define what we mean by 'sure'. Say that we are happy with a type II error of 10%. We select 'Sample Size' and plug in the following numbers:

| variable | value | description |

|---|---|---|

| α | 0.05 | the probability of a type I error (finding a difference when there is none) |

| power | 0.90 | the probability of NOT having a type II error (1 - 0.10 = 0.90) |

| p0 | 0.16 | the null hypothesis, probability of finding a first aid kit with the lights on (we assume our first estimate was correct) |

| p1 | 0.21 | the alternate hypothesis (p0 + 0.05) |

| m | 1 | the ratio between searches with lights on and off (we assume they will be equal) |

The result is n = 1266. That means we would have to do 633 searches in each type of hospital (lights on and lights off) before we can be 90% sure that we have not failed to detect the rather small search bonus of 5%.
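Both program runs can be roughly cross-checked with a textbook normal approximation for comparing two proportions. This is only a sketch and not the algorithm used in DuPont and Plummer's program; the function names and the choice of an uncorrected two-sided test are assumptions. Under these assumptions the formula lands close to the quoted 0.3655 only when n = 227 is read as the size of one group, with m x n (about 442) searches in the other, and it gives roughly 1266 searches per group for 90% power. It may therefore be worth checking whether the program's n is a per-group count rather than a total.

```python
from math import ceil, sqrt
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def power_two_proportions(p0, p1, n0, n1, alpha=0.05):
    """Approximate power of a two-sided test of p0 vs p1, with n0 searches
    at probability p0 and n1 searches at probability p1."""
    pooled = (n0 * p0 + n1 * p1) / (n0 + n1)
    se_null = sqrt(pooled * (1 - pooled) * (1 / n0 + 1 / n1))
    se_alt = sqrt(p0 * (1 - p0) / n0 + p1 * (1 - p1) / n1)
    critical = Z.inv_cdf(1 - alpha / 2) * se_null
    diff = abs(p1 - p0)
    # Probability of landing in either rejection tail under the alternative.
    return Z.cdf((diff - critical) / se_alt) + Z.cdf((-diff - critical) / se_alt)

def per_group_sample_size(p0, p1, alpha=0.05, power=0.90):
    """Approximate searches needed in EACH of two equal-sized groups."""
    pooled = (p0 + p1) / 2
    z_alpha = Z.inv_cdf(1 - alpha / 2)
    z_beta = Z.inv_cdf(power)
    numerator = (z_alpha * sqrt(2 * pooled * (1 - pooled))
                 + z_beta * sqrt(p0 * (1 - p0) + p1 * (1 - p1))) ** 2
    return ceil(numerator / (p1 - p0) ** 2)

# Actual split of the hospital data: 150 searches lights on, 77 lights off.
print(f"power, 150 vs 77 searches:  {power_two_proportions(0.16, 0.21, 150, 77):.3f}")
# Reading n = 227 as one group and m * n as the other.
print(f"power, 442 vs 227 searches: {power_two_proportions(0.16, 0.21, 1.948 * 227, 227):.3f}")
print(f"searches per group for 90% power: {per_group_sample_size(0.16, 0.21)}")
```

The same functions can be used to explore the follow-up questions below, for example by changing `p1` to test a 10% bonus or `power` to 0.80.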

With tools like this on hand for power analysis, there are plenty of interesting questions we might ask:

- How much do we need to search to detect a 10% difference in search efficiency? A 1% difference?

- Is it better to search all buildings equally? What if we search one type of building twice as often as another?

- How sure of the answer do we want to be? What if we reduce the power to 80% or increase it to 95%?

As if I weren't spending too much time already playing UrbanDead, now I have to worry about the efficiency of my search strategy as well! Thanks a lot, and Happy Holidays! ^_^

Stuckinkiel 15:38, 19 Dec 2005 (GMT)

Addendum: Necrotech Lights on problem

Since the previous attempt, there's been quite a lot of activity on the Necrotech set. We're probably not quite at optimum numbers for a certain analysis, but we have enough numbers to run this stuff through again.

Listing the tables as they were when I did the calculations:

| f(o) | Rev. Syringe | DNA Ex. | GPS Unit | Book | Nothing | Total |

|---|---|---|---|---|---|---|

| Lights off | 30 | 31 | 26 | 32 | 471 | 590 |

| Lights on | 38 | 48 | 37 | 47 | 697 | 867 |

| Total | 68 | 79 | 63 | 79 | 1168 | 1457 |

| f(e) | Rev. Syringe | DNA Ex. | GPS Unit | Book | Nothing | Total |

|---|---|---|---|---|---|---|

| Lights off | 27.53603294 | 31.99039121 | 25.51132464 | 31.99039121 | 472.97186 | 590 |

| Lights on | 40.46396706 | 47.00960879 | 37.48867536 | 47.00960879 | 695.02814 | 867 |

| Total | 68 | 79 | 63 | 79 | 1168 | 1457 |

| diff | Rev. Syringe | DNA Ex. | GPS Unit | Book | Nothing | Total |

|---|---|---|---|---|---|---|

| Lights off | 0.220479604 | 0.030661543 | 0.00936069 | 2.88614E-06 | 0.008220852 | 0.268725576 |

| Lights on | 0.150038024 | 0.02086541 | 0.00637002 | 1.96404E-06 | 0.005594352 | 0.182869769 |

| Total | 0.370517628 | 0.051526953 | 0.01573071 | 4.85018E-06 | 0.013815204 | 0.451595345 |

Our resultant Chi Square, 0.451595345, resolves to a probability of 97.8% that the data we're seeing could have been the result of sheer chance. Even if our numbers aren't quite high enough for full proof, it's worth noting that results at this sort of level are almost certainly indicative of there being absolutely no difference between lights on and lights off. We might expect some controversy if, say, the numbers had only a 50% probability of fit, but at 97.8% probability, it seems pretty clear. -- Odd Starter talk | Mod 13:58, 9 Jan 2006 (GMT)

Addendum to the Addendum: Necrotech Lights on problem

I am concerned that we are being a bit enthusiastic with our assertion that the search odds are really the same with the lights on and the lights off. I believe the 97.8% probability mentioned above is being slightly misconstrued. The Chi-squared test gives us a measure of so-called "type I error" (the probability that we see a difference when there is, in fact, none). This tells us a lot when we find a significant difference, but is much less useful when we do not. This is why statisticians never 'accept the null hypothesis', but at best can only 'fail to reject' the null hypothesis. In other words, if we had low P-values (< 0.05), it would be an indication that the search probabilities are different, but it is NOT the case that high P-values (for example 0.978) indicate that search probabilities are the same. To measure "type II error" (the probability that we see no difference when there is, in fact, one), we have to do a power calculation. If we choose a power of 0.978 for our test, and if we focus on finding a syringe with lights on vs. lights off with the 590/867 searches we have, we can be 97.8% 'sure' that the probability of finding a syringe with lights off is between 14% and 31%, compared to 22% with lights on. Again, if we assume that Kevan added 5% to our chance of finding a first aid kit with the lights on, we would probably not (at a power of 97.8%) be able to detect it with a sample size of 590/867.

I'm not trying to be a wet blanket or anything, I just don't want us to prematurely declare that there is no difference between search odds, when in fact there could be.

Stuckinkiel 10:44, 13 Jan 2006 (GMT)

Missing buildings: Car Parks

We currently have no records of Car Parks, which is a fuel can location. Any chance we can get some searches from them? I should be able to do some tomorrow. --GoNINzo 18:33, 24 Jan 2006 (GMT)

- I've set up a page for Car Park Data. Fill it as you desire :) -- Odd Starter talk | Mod 02:12, 25 Jan 2006 (GMT)

- Link to Car Park Data. and Thanks! --Tycho44 06:52, 8 Feb 2006 (GMT)

- Besides, there is nothing in carparks anyway...

Books

I've been carrying around a bunch of books and I've finally decided to sit down and read them. To document my activities I have created a new Book page. Does anyone have any objections to this 'non-traditional' search location being under the Search Odds pages? -- Stuckinkiel 09:32, 26 Jan 2006 (GMT)

- I like this idea as well! This data should be captured somewhere...--GoNINzo 18:50, 26 Jan 2006 (GMT)

- Err, there's already a page for this that I set up some time ago - You can find it at Book Odds. Though, I have no objection to moving that page somewhere else. -- Odd Starter talk | Mod 10:28, 28 Jan 2006 (GMT)

- Okay, I have moved my data to the Book Odds page and deleted the other page. Are there any links to the Book Odds page? Should a link be put in the Searchnav template? --Stuckinkiel 08:46, 31 Jan 2006 (GMT)

- Why not? Probably worth starting a new line for Miscellaneous Statistics... -- Odd Starter talk | Mod 12:08, 2 Feb 2006 (GMT)
